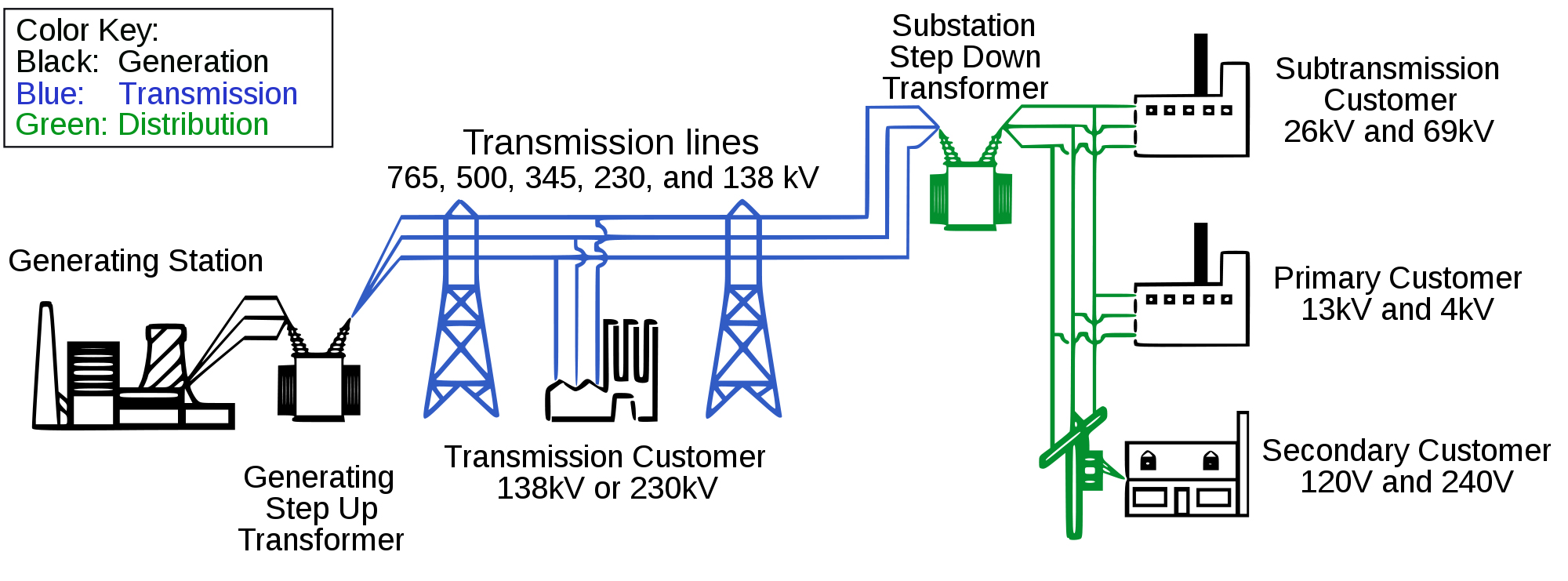

This is the first of two guest posts on the topic of smart electric grids in the United States (although many other countries are wrestling with the same issues). The policy issues require some understanding of the engineering, so I'll start there. A simplified diagram showing the basic structure of the overall electric grid appears to the left. Substation transformers are the natural dividing point for the discussion. This post is concerned with the smart grid as the term is applied to the portion of the grid to the left of the substation transformer: large-scale generation and transmission. The second post will be concerned with the portion to the right of the substation: distribution, consumers, and small-scale generation. Some of the grid problems are easier to understand in the context of transmission, so I'm starting there. The power industry uses an incredible array of acronyms; I'll try to avoid using too many of them.

The US transmission network is a hodge-podge of 300,000 kilometers of lines operated by 500 different companies. A simplified representation that doesn't include many of the minor links is shown here. Like Topsy, it "just growed." In the beginning there were vertically integrated utilities, each with its own generating, transmission, and distribution systems. In time, and for a variety of reasons, the utilities interconnected their private networks. One of the reasons was reliability. If utility A had a generator break down unexpectedly and lacked idle generating capacity of its own, it could buy power from connected utility B, which did have idle capacity, until the problem was fixed. The Northeast Blackout of 1965 convinced the utilities that such ad hoc reliability arrangements were insufficient, and they created the nonprofit organization now known as the North American Electric Reliability Corporation (NERC), which begat various regional reliability organizations, all charged with making the grid more reliable.

Did this fix the problems? On the afternoon of September 8, 2011, portions of the southwestern United States and northwestern Mexico experienced an electricity blackout. The blackout began when a field technician made a procedural error that took a major 500,000-volt transmission line out of service. Over the next 11 minutes, a classic "cascading failure" event knocked out power for nearly seven million people. The government's post-mortem analysis (sizable PDF) identifies a variety of problems. The grid was poorly instrumented, poorly modeled by the control system, and operated near maximum capacity too much of the time, and the controllers for the different parts of the network didn't communicate quickly or well, among other things. To someone who spent part of a career designing high-reliability distributed software systems, it reads like a system intended to fail [1].

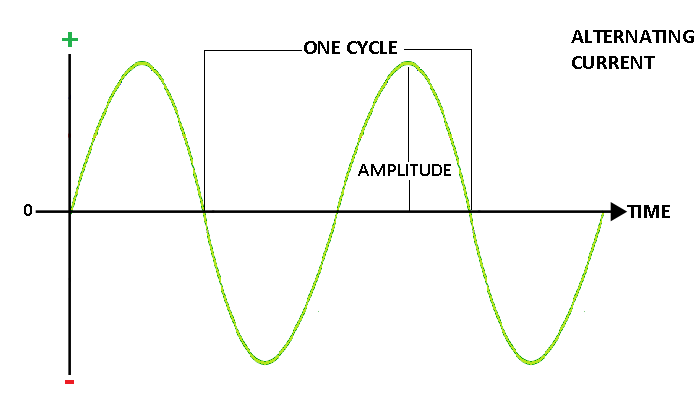

Some (simplified) background science and engineering is necessary here. The first reason managing the transmission network is a hard problem is scale. The US grid consists of three largely independent parts. The Eastern Interconnect, the largest of the three, covers 36 states and parts of Canada from the Atlantic Ocean to the Great Plains. It is a synchronous alternating-current (AC) network. AC electricity — the stuff in the outlets in your walls — varies the voltage from positive to negative and back 60 times each second. The voltage rise and fall over time follows the precise shape (a sine wave) shown here. The output from every generator attached to the Eastern Interconnect must move up and down at exactly the same time. Most generators involve very large rotating masses that don't like to change speed very quickly. If a generator gets out of sync with the rest of the grid, Bad Things happen to it [2].
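To make the "exactly the same time" requirement concrete, here is a minimal Python sketch of an ideal 60-cycle sine wave and of how far apart two such waves drift when one is shifted in phase. The 170-volt peak is my assumption (roughly the peak of a nominal 120-volt wall outlet), and the function names are made up for illustration; real generators are synchronized at far higher voltages and with much more care than this.

```python
import math

GRID_HZ = 60.0      # cycles per second, as described in the text
PEAK_VOLTS = 170.0  # assumption: approximate peak of a 120 V RMS outlet


def voltage(t_seconds, phase_degrees=0.0):
    """Instantaneous voltage of an ideal sine wave at time t."""
    angle = 2.0 * math.pi * GRID_HZ * t_seconds + math.radians(phase_degrees)
    return PEAK_VOLTS * math.sin(angle)


def worst_mismatch(phase_degrees, samples=1000):
    """Largest sampled voltage gap over one cycle between the grid and a
    generator whose output is shifted by phase_degrees."""
    period = 1.0 / GRID_HZ
    times = [i * period / samples for i in range(samples)]
    return max(abs(voltage(t) - voltage(t, phase_degrees)) for t in times)


if __name__ == "__main__":
    for shift in (0, 10, 90, 180):
        print(f"{shift:3d} degrees out of step: "
              f"up to {worst_mismatch(shift):.1f} V apart")
```

Even a small phase shift opens a noticeable gap between the two waves, and a generator that is half a cycle off is pushing in exactly the opposite direction from everything else on the network.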

Another reason that reliability is a hard problem is the nature of electricity. If a generator or transmission link fails, the loads on other components of the network change almost instantly. Where the load will shift is determined by the network's complex topology (see map above). Exciting things can happen when such a shift occurs: much of the added load may fall on a few individual components, possibly overloading them; the flow of power over a particular transmission line may reverse; for a brief period of time, until the generators have adjusted to the new configuration — slowly in comparison to the speed the network loads change — the quality of that sine wave shown above may go to hell in some locations. That last one can be a serious problem. If the quality degrades enough, one or more generators may disconnect themselves from the network in order to avoid the Bad Things mentioned above. Of course, that results in another major change in how the network is loaded, which may take other components out of service, which... cascading failure and millions of people lose power.
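The chain reaction can be illustrated with a toy model. The Python sketch below is not how power actually flows: real flows follow the physics of the whole network, while this version simply assumes that a tripped line's load is split evenly across the lines still in service. The line names, flows, and capacities are invented for the example.

```python
def simulate_cascade(flows, capacities, first_failure):
    """Toy cascade: when a line trips, its flow is split evenly across the
    lines still in service, and any line pushed past its capacity trips
    next. Returns the names of the tripped lines in the order they failed."""
    flows = dict(flows)  # work on a copy so the caller's data is untouched
    tripped = []
    pending = [first_failure]
    while pending:
        line = pending.pop(0)
        if line not in flows:
            continue
        lost = flows.pop(line)
        tripped.append(line)
        if not flows:
            break  # nothing left to carry the load: total blackout
        share = lost / len(flows)
        for name in flows:
            flows[name] += share
        for name in flows:
            if flows[name] > capacities[name] and name not in pending:
                pending.append(name)
    return tripped


if __name__ == "__main__":
    capacities = {"A": 100, "B": 100, "C": 100, "D": 100}
    stressed = {"A": 90, "B": 90, "C": 90, "D": 90}  # running near capacity
    relaxed = {"A": 50, "B": 50, "C": 50, "D": 50}   # plenty of headroom
    print(simulate_cascade(stressed, capacities, "A"))  # ['A', 'B', 'C', 'D']
    print(simulate_cascade(relaxed, capacities, "A"))   # ['A']
```

In the heavily loaded case the loss of one line takes down all four; with headroom, the same failure stops at the first line.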

On a somewhat different note, over the last 30 years, we have started to demand more from the transmission network than simply reliable transport within an integrated utility or small group of such utilities. In 1978, the Public Utility Regulatory Policies Act (PURPA) required utilities to begin buying power from independent generating companies when the offered price was cheaper than the utility's cost to generate power. In the 1990s, the Federal Energy Regulatory Commission (FERC) separated operation of regional transmission grids from the utilities that owned them for substantial parts of the country. As a result of these changes, a large number of new independent generators, most of them relatively small, got into the business. These changes are often referred to as the deregulation of the wholesale electricity business. "Reregulation" would be a better term, as the business is still heavily regulated, only under a different set of rules, and with different goals.

Many of these new generators use either renewable resources or co-generation. Co-generation is basically applying waste heat from some industrial process to make steam and produce electricity [3]. For example, for years lumber and paper mills have burned the scrap from timber (bark, small limbs, sawdust, needles/leaves) that they can't use in their principal products to make electricity for their own use; the legal changes made it possible for them to sell any excess to the utilities. Both co-generation and renewable power sources are intermittent on some time scale. Solar has that pesky day/night thing; there are hours and days when the wind doesn't blow, sometimes unexpectedly; hydro is often seasonal; lumber mills don't operate all day every day. Intermittent sources make the problem of managing the transmission grid even more difficult; in effect, partial "failures" become more common [4].

Making the correct decisions to manage the failure of generators and transmission lines requires a "big picture" view of the network. For example, when a transmission line fails, disconnecting the right couple of substations to shed load in exactly the right places may keep the network up and stable: accepting a limited blackout in order to avoid a much more widespread one. For the transmission network, the term "smart grid" refers to adding all of the things necessary to acquire and make use of the big picture. Large numbers of sensors would be added to the network, all monitoring the quality of the local sine wave, and reporting on its condition many times per second. Individual generators and substations connected to the transmission grid would report their status once every few seconds. All of the data would be collected and processed in real time. The big picture that emerges from all of that would identify failures almost instantly. Given knowledge of the current state of the network and exactly what kind of failure has occurred, a controller can take appropriate actions. For a network the size of the Eastern Interconnect, human controllers are too slow; the job would have to be turned over to software.
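As a very rough illustration of the kind of decision such software would make, here is a Python sketch that picks which substations to disconnect so that the shed load covers a shortfall while blacking out as little load as possible. Everything in it is hypothetical: the substation names and megawatt figures are invented, it ignores where the substations sit in the network (which, as noted above, matters a great deal), and the brute-force search would only be practical for a handful of substations.

```python
from itertools import combinations


def choose_substations_to_shed(loads_mw, shortfall_mw):
    """Return (total_shed_mw, substations) for the combination whose
    combined load covers the shortfall with the least load blacked out.
    Returns None if shedding every substation still would not be enough."""
    best = None
    names = sorted(loads_mw)
    for size in range(1, len(names) + 1):
        for combo in combinations(names, size):
            shed = sum(loads_mw[name] for name in combo)
            if shed >= shortfall_mw and (best is None or shed < best[0]):
                best = (shed, combo)
    return best


if __name__ == "__main__":
    # Hypothetical substation loads in megawatts.
    loads = {"north": 120, "east": 80, "south": 45, "west": 60}
    print(choose_substations_to_shed(loads, shortfall_mw=100))
```

With these made-up numbers, dropping the two smaller substations covers a 100-megawatt shortfall with less load lost than dropping the single large one. A real controller would also have to weigh network topology, timing, and who owns what.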

So, why hasn't all of this happened already? Blackouts are, after all, things that we would like to avoid. People die in blackouts. Millions of dollars' worth of food spoils. Transportation systems come to a standstill. Municipal water systems are contaminated when the pumps fail, and have to be flushed. There seem to be three particular reasons why we don't already have a smart transmission network.

- Cost. Estimates of the cost to deploy the necessary components and control systems across the country run as high as $100 billion. Note in passing that the initial event that led to the Northeast Blackout of 2003 (55 million or so people without power in the US and Canada) was a transmission line coming in contact with a tree. The company that owned the line was too cheap to keep the trees properly trimmed back. How likely is it that they will willingly pay for expensive sensors?

- Security. Recent history suggests that industrial control systems are subject to hacking by outsiders. The more centralized control of the transmission network becomes, the greater the damage that an intruder can do. Long ago, when I worked for the Bell System, we largely resolved the outside hacker problem by building a control network that was physically separate from the public network. It was an expensive undertaking; see "Cost" above.

- Control. Generators, substations, and transmission lines are valuable assets. Their owners are understandably reluctant to give up control of the decision on when those assets should be disconnected from a network going through a cascading failure event to protect them from harm. "The idiots in Cleveland didn't disconnect us soon enough!" is not an explanation for a $50 million generator shaft in the ravine on the far side of the parking lot that anyone wants to deliver to their Board of Directors.

I'm going to spend the last bit of this post explaining why I don't think spending on a smart transmission network is the best use of the money at this point in time. The problem that a smarter grid doesn't solve is that the US operates portions of its transmission network too close to capacity too much of the time. Making the transmission grid smart is in very large part about trying to cope with the problems that occur when the network is under heavy stress. When the grid isn't stressed, it doesn't need to be nearly so smart. It is not surprising that the major non-weather-related blackouts occur where they do. Federal law (the Energy Policy Act of 2005) requires the Department of Energy to conduct periodic studies of the transmission network and identify critical congestion areas. The 2006 study identified two such areas: Southern California and the Mid-Atlantic Coast, shown in the accompanying figure. The 2009 study identified the same areas, and when the 2012 study is published it will no doubt identify the same areas again.

To put it simply, the US grid would be better served in the near term by spending money to add a bunch of stupid bulk transmission capacity, starting in the regions identified as critically congested, than by spending it on an expensive smart grid project. Besides, adding capacity is something that we're going to have to do anyway. To pick an example: if the Western Interconnect region is going to make much heavier use of renewable power, it will need to substantially beef up its capacity for long-distance electricity transport. Northwestern hydro, Great Plains wind, Southwestern solar, and Great Basin geothermal (if that works out) will all need to be moved in large quantities all over the West in order to match supply and demand, and into Southern California in particular. The need for additional transmission in Southern California has become even more pressing now that SoCal Edison has decided to retire rather than repair the San Onofre nuclear plant.

[1] More disturbing is that if you go back a little farther in time and read the post-mortem report on the Northeast Blackout of 2003, which affected 55 million people in the US and Canada, almost exactly the same "designed to fail" problems were present. It looks disturbingly like no one learned anything between one major failure and the next.

[2] A friend told me about an incident where a generator began to vibrate, a common symptom when the generator and network are out of sync. The multi-ton main shaft, rotating at several hundred RPM, broke free from the structure holding it in place. It went through the protective steel enclosure, through the concrete wall into the next section of the power plant, through the concrete wall at the end of that section, bounded across the parking lot, and wound up in the ravine.

[3] The very large majority of US power is generated by the process of (1) burn something; (2) use the heat to boil water to make steam; and (3) use the steam to spin a turbine to turn a generator. Hero of Alexandria, the ancient Greek engineer credited with building the first recorded steam turbine, would understand the basic principles.

[4] Conventional power plants such as a coal-fired generator are also intermittent, but are mostly offline according to a schedule that is known in advance. For example, "We'll be offline for the first two weeks of November for annual maintenance." For reliability planning, the authorities in Texas almost completely discount wind generators because the owners can't say, "Yes, we can generate full power on Tuesday next week."